In this blog post I describe how a large bank was able to scale a multi-geographical deployment on top of the Apache Ignite™ (incubating) In-Memory Data Grid.

Problem Definition

Imagine a bank offering a variety of services to its customers. The customers of the bank are located in different geo-zones (regions), and most of the operations performed by a customer are zone-local, like ATM withdrawals or bill payments. Zone-local operations are very frequent and need to be processed very quickly.

However, some operations, such as wire transfers, may affect customers across different zones. Cross-zone operations are less frequent, but they still need to be supported in a transactional fashion.

We should also take into account that different zones may require different processing power. Some densely populated zones, like New York or Chicago, will have a much higher volume of bank transactions than less populated areas.

Let's define our problem as the ability to store, deploy, and scale different geographical zones separately from each other, while still maintaining the ability to transact, compute, and query across multiple zones at the same time.

Solution

To process customer transactions quickly, we need to make sure that we access all the data in memory without touching the disk at all. We also need to make sure that we can horizontally scale the system by adding more servers to individual geographical zones as the load within a zone increases.

Let us summarize the requirements:

- we have N machines in the cluster

- we have Z geographical zones

- number of customers in different zones can vary

- each zone must be able to scale out independently from other zones
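To make these requirements concrete, here is a minimal sketch of how N machines might be split across Z zones. It assumes machines are assigned in proportion to customer counts, with at least one machine per zone; the post does not say how the bank actually sized its zones, and the function name, zone names, and customer counts are all hypothetical.

```python
def allocate_machines(customers_per_zone, n_machines):
    """Split n_machines across zones in proportion to customer counts.

    Every zone gets at least one machine; machines left over after
    rounding down go to the zones with the largest fractional remainders.
    """
    zones = list(customers_per_zone)
    if n_machines < len(zones):
        raise ValueError("need at least one machine per zone")
    total = sum(customers_per_zone.values())
    if total <= 0:
        raise ValueError("total customer count must be positive")

    spare = n_machines - len(zones)  # machines left after one per zone
    # Ideal (fractional) share of the spare machines for each zone.
    ideal = {z: spare * customers_per_zone[z] / total for z in zones}
    alloc = {z: 1 + int(ideal[z]) for z in zones}

    # Hand out what rounding down left unassigned, largest remainder first.
    leftover = n_machines - sum(alloc.values())
    by_remainder = sorted(zones, key=lambda z: ideal[z] - int(ideal[z]),
                          reverse=True)
    for z in by_remainder[:leftover]:
        alloc[z] += 1
    return alloc


# Hypothetical customer counts, for illustration only.
customers = {"new_york": 6_000_000, "chicago": 3_000_000, "other": 1_000_000}
allocation = allocate_machines(customers, n_machines=13)
print(allocation)  # {'new_york': 7, 'chicago': 4, 'other': 2}
```

Re-running the function with a larger N shows how extra machines would be distributed, but the last requirement is stricter than that: a single zone must be able to grow without touching the others.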

Using an in-memory data grid seems like a natural choice. Most in-memory data grids support distributed transactional in-memory caches for customer data; however, not every data grid allows a proper physical separation of different geographical zones.

Let's analyze several different approaches.

Approach 1: No Zone Separation

In this approach, all the customer data from all the geographical zones is evenly distributed within the cluster without any zone separation.

BAD:

This solution was discarded immediately as it does not provide any way to scale individual zones separately from each other.

Approach 2: Separate Cluster for each Zone

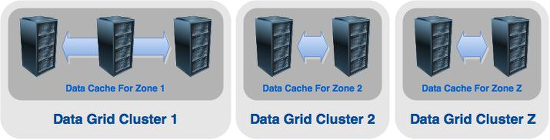

In this approach, all available machines are divided into Z (zone count) independent clusters, and each zone is deployed in a separate cluster with a separate data grid.

BAD:

This solution was also discarded: with separate disjoint clusters for each zone, cross-zone operations become very difficult to handle. Since the clusters do not know about each other, the user would need to implement cross-zone transactions manually, which is a non-trivial problem to solve and does not seem to be worth the effort.

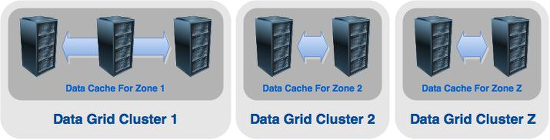

Approach 3: Single Cluster With Separate Zones

The actual solution was a combination of the first two approaches. Essentially, each zone is stored in its own in-memory cache within the same cluster, but each cache is deployed on its own non-intersecting group of servers from that cluster.
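The layout described here can be modeled in a few lines. The sketch below is a toy model, not Ignite code: the `Node` and `Cluster` classes and the zone names are hypothetical, and the only idea it demonstrates is that each zone's cache topology is a filter over the nodes of one shared cluster. In Ignite itself this role is played by a per-cache node filter; see the project documentation for the actual configuration.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    node_id: str
    zone: str  # attribute assigned to the node when it starts


@dataclass
class Cluster:
    nodes: list = field(default_factory=list)

    def join(self, node_id, zone):
        """Add a server to the single shared cluster."""
        self.nodes.append(Node(node_id, zone))

    def topology_for(self, zone):
        """Servers hosting the cache for `zone`: a filter over all nodes."""
        return [n.node_id for n in self.nodes if n.zone == zone]


cluster = Cluster()
for node_id, zone in [("n1", "new_york"), ("n2", "new_york"),
                      ("n3", "chicago"), ("n4", "other")]:
    cluster.join(node_id, zone)

# Scale out only the busy zone; the other zones' topologies do not change.
chicago_before = cluster.topology_for("chicago")
cluster.join("n5", "new_york")

print(cluster.topology_for("new_york"))  # ['n1', 'n2', 'n5']
print(cluster.topology_for("chicago"))   # ['n3']
```

Because the groups never intersect, adding `n5` changes where New York data lives and nothing else, yet all five servers still belong to one cluster.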

It turns out that Apache Ignite was the only data grid that allowed defining different server topologies for different caches within the same cluster. Also, thanks to Apache Ignite's cross-cache transaction support, transactions across different caches (and hence different zones) were possible out of the box.
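To illustrate what a cross-zone transaction has to guarantee, here is a minimal single-process sketch of a wire transfer between accounts held in two different zone caches. It is not Ignite's transaction API: the `ZoneCaches` class is hypothetical, and one lock stands in for the cluster-wide transaction so that both updates happen or neither does.

```python
import threading


class InsufficientFunds(Exception):
    pass


class ZoneCaches:
    """Toy stand-in for per-zone caches sharing one transaction scope."""

    def __init__(self, balances_by_zone):
        # Layout: {zone: {account_id: balance}}
        self._caches = {z: dict(b) for z, b in balances_by_zone.items()}
        # A single lock models a transaction spanning several caches.
        self._lock = threading.Lock()

    def balance(self, zone, account):
        return self._caches[zone][account]

    def transfer(self, src, dst, amount):
        """Move `amount` between two (zone, account) pairs, all or nothing."""
        (src_zone, src_acct), (dst_zone, dst_acct) = src, dst
        with self._lock:
            if self._caches[src_zone][src_acct] < amount:
                # Validation fails before any cache has been modified.
                raise InsufficientFunds(src_acct)
            self._caches[src_zone][src_acct] -= amount
            self._caches[dst_zone][dst_acct] += amount


caches = ZoneCaches({"new_york": {"alice": 100}, "chicago": {"bob": 20}})
caches.transfer(("new_york", "alice"), ("chicago", "bob"), 70)
try:
    caches.transfer(("new_york", "alice"), ("chicago", "bob"), 50)
except InsufficientFunds:
    pass  # rejected: neither zone's balance changed

print(caches.balance("new_york", "alice"), caches.balance("chicago", "bob"))  # 30 90
```

With separate clusters per zone (Approach 2), the application itself would have to provide this all-or-nothing behavior across clusters, which is the manual work the bank wanted to avoid.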

WINNER:

This approach was chosen as the winner since it allowed for collocating and scaling different geographical zones separately from each other in a very efficient way, while maintaining the ability to perform cross-zone transactions without extra coding effort.

For more information on Apache Ignite or some pretty cool screencasts, please visit the project website.

Reprinted with permission from http://onemoregeek2000.blogspot.com