Over the past few months I’ve been asked repeatedly how GridGain relates to Hadoop. Having answered this question over and over again, I’ve condensed the answer to just a few words:

We love Hadoop HDFS, but we are sorry for people who have to use Hadoop MapReduce.

Let me explain.

Hadoop HDFS

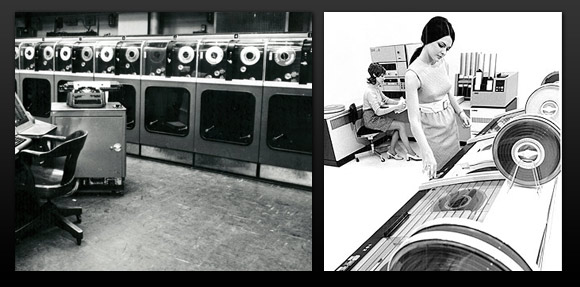

We love Hadoop HDFS. It is a new and improved version of the enterprise tape drive. It is an excellent technology for storing large historical data sets (TB and PB scale) in distributed disk-based storage. Essentially, every computer in a Hadoop cluster contributes a portion of its disk(s) to HDFS, and you get a unified view of this large virtual file system.

It has its shortcomings too, such as slow performance, the complexity of ETL, the inability to update a file that has already been written, and the inability to deal effectively with small files. Some of these are expected, and since the project is still in development, some will be mitigated in the future. Still, today HDFS is probably the most economical way to keep very large static data sets of TB and PB scale in a distributed file system for long-term storage.

GridGain provides several integration points for HDFS, such as a dedicated data loader and cache loaders. The dedicated data loader allows data to be bulk-loaded into the In-Memory Data Grid, while a cache loader allows much more fine-grained transactional loading and storing of data to and from HDFS.
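To make the difference between the two loading styles concrete, here is a minimal sketch in Python. It is not GridGain's API: `BackingStore`, `InMemoryCache`, and every method name are hypothetical, a plain dictionary stands in for HDFS, and transactions are left out entirely. It only illustrates bulk loading up front versus reading and writing individual entries through to the store.

```python
from typing import Dict, List, Optional, Tuple


class BackingStore:
    """Hypothetical stand-in for slow long-term storage such as HDFS."""

    def __init__(self) -> None:
        self._data: Dict[str, str] = {}
        self.reads = 0  # how many times the slow store was asked for a key

    def load(self, key: str) -> Optional[str]:
        self.reads += 1
        return self._data.get(key)

    def store(self, key: str, value: str) -> None:
        self._data[key] = value

    def scan(self) -> List[Tuple[str, str]]:
        return list(self._data.items())


class InMemoryCache:
    """Hypothetical in-memory cache sitting in front of a BackingStore."""

    def __init__(self, backing: BackingStore) -> None:
        self._backing = backing
        self._entries: Dict[str, str] = {}

    def bulk_load(self) -> int:
        # Dedicated-loader style: copy everything into memory in one pass.
        for key, value in self._backing.scan():
            self._entries[key] = value
        return len(self._entries)

    def get(self, key: str) -> Optional[str]:
        # Cache-loader style: go to the store only on a cache miss.
        if key in self._entries:
            return self._entries[key]
        value = self._backing.load(key)
        if value is not None:
            self._entries[key] = value
        return value

    def put(self, key: str, value: str) -> None:
        # Write-through: persist first, then update the in-memory copy.
        self._backing.store(key, value)
        self._entries[key] = value
```

In this sketch a second `get` for the same key never touches the store, which is the point of keeping the working set in memory while HDFS remains the system of record.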

The fact that many clients use GridGain with HDFS is a good litmus test for that integration.

Hadoop MapReduce

As much as we like Hadoop HDFS, we think Hadoop’s implementation of MapReduce processing is inadequate and outdated:

Would you run your analytics today off tape drives? That’s what you do when you use Hadoop MapReduce.

The fundamental flaw in Hadoop MapReduce is the assumption that a) storing data and b) acting upon data should be based on the same underlying storage.

Hadoop MapReduce runs jobs over the data stored in HDFS and thus inherits, and even amplifies, all the shortcomings of HDFS: extremely slow performance and disk-based storage that leads to a heavy batch orientation, which in turn leads to an inability to process low-latency tasks effectively. This ultimately makes Hadoop MapReduce an "elephant in the room" when it comes to delivering real-time big data processing.

Yet one of the most glaring shortcomings of Hadoop MapReduce is that you’ll never be able to run your jobs over live data. HDFS by definition requires some sort of ETL process to load data from traditional online/transactional (i.e. OLTP) systems into HDFS. By the time the data is loaded (hours if not days later), the very data you are going to run your jobs over is stale or, frankly, dead.

GridGain

GridGain’s MapReduce implementation addresses many of these problems. We keep both highly transactional and unstructured data smartly cached in an extremely scalable In-Memory Data Grid and provide an industry-first, fully integrated In-Memory Compute Grid that allows you to run MapReduce or distributed SQL/Lucene queries over the data in memory.

Having both data and computations co-located in memory makes low-latency or streaming processing simple.
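As a rough illustration of what co-location means, the sketch below runs the map step on each node against the records that node already holds in memory and ships only the small partial results to the reducer. It is a toy single-process model in Python, not GridGain code: `Node`, `run_local`, and `map_reduce` are hypothetical names, and real nodes would of course be separate machines.

```python
from functools import reduce
from typing import Callable, List, TypeVar

T = TypeVar("T")
R = TypeVar("R")


class Node:
    """Hypothetical grid node holding one partition of the data in memory."""

    def __init__(self, name: str, records: List[T]) -> None:
        self.name = name
        self.records = records

    def run_local(self, mapper: Callable[[List[T]], R]) -> R:
        # The computation comes to the data: no records leave this node.
        return mapper(self.records)


def map_reduce(
    nodes: List[Node],
    mapper: Callable[[List[T]], R],
    reducer: Callable[[R, R], R],
    initial: R,
) -> R:
    # Map: each node processes its own in-memory partition.
    partials = [node.run_local(mapper) for node in nodes]
    # Reduce: only the partial results are combined centrally.
    return reduce(reducer, partials, initial)


# Usage: total order amount across two partitions.
nodes = [Node("node-1", [10, 20, 30]), Node("node-2", [40, 50])]
total = map_reduce(nodes, mapper=sum, reducer=lambda a, b: a + b, initial=0)
```

Because each mapper reads from local memory instead of disk, the latency of a job in this model is dominated by the computation itself rather than by I/O, which is the property the paragraph above relies on.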

You can, of course, still keep any data for long-term storage in an underlying SQL, ERP or Hadoop HDFS store when using GridGain, and GridGain intelligently supports any type of long-term storage.

Yet GridGain doesn’t force you to use the same storage for processing data. We give you the choice to use the best of both worlds: keep data in memory for processing, and keep data in HDFS for long-term storage.

http://vimeo.com/39065963