Apache Cassandra is one of the leading open-source distributed NoSQL disk-based databases. It is deployed in mission-critical infrastructures at Netflix, eBay, Expedia, and many others. It gained popularity for its speed, its ability to scale linearly to thousands of nodes, and its "best-in-class" replication between data centers.

Apache Ignite is a memory-centric distributed database, caching, and processing platform for transactional, analytical, and streaming workloads delivering in-memory speeds at petabyte scale with support for JCache, SQL99, ACID transactions, and machine learning.

Apache Cassandra is a classic solution in its field. As with any specialized solution, its advantages come at the cost of compromises, many of which stem from the limitations of disk storage. Cassandra is optimized to work with disks as fast as possible, to the detriment of everything else.

Examples of such trade-offs are the lack of ACID transactions and SQL support, and the inability to run arbitrary transactional and analytical queries unless the data has been modeled for them in advance. These compromises, in turn, create logical difficulties for users. They lead to incorrect use of the product and a negative experience, or they force data to be split across different types of storage, which fragments the infrastructure and complicates the data storage logic in applications.

A full comparison of Apache Ignite and Cassandra is outlined in the articles below. Read them if you want to learn more about the trade-offs and specifics of each database:

- Apache Cassandra or Apache Ignite? Thoughts on a simplified architecture

- Apache Cassandra vs. Apache Ignite: Affinity Collocation and Distributed SQL

- Apache Cassandra vs. Apache Ignite: Strong Consistency and Transactions

Suppose, though, that we are active Cassandra users. Can we use it together with Apache Ignite, keeping our existing Cassandra deployments while using Ignite to address Cassandra's limitations? The answer is yes. We can deploy Ignite as an in-memory layer on top of Cassandra, and this article shows how.

Cassandra Restrictions

First, I want to briefly go through the main limitations of Cassandra, which we want to mitigate:

1. Throughput and response time are limited by the characteristics of the hard disk or solid-state drive;

2. The data storage structure is optimized for sequential writes and reads and is not suited to efficient execution of classical relational operations. It does not let you normalize data and combine it efficiently with JOINs, and it imposes significant restrictions on operations such as GROUP BY and ORDER BY;

3. As a consequence of item 2, there is no SQL support, only its more limited variant, CQL;

4. There are no ACID transactions.

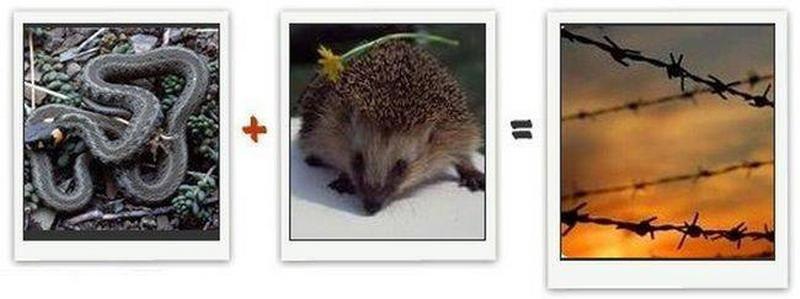

One can argue that here we are trying to use Cassandra for purposes it was not designed for, and I would agree. My goal is to show that if you solve these problems, the range of Cassandra's applications can be expanded significantly. By combining a man and a horse, we get a rider, who can do things that neither the man nor the horse can do alone.

How can you circumvent these limitations?

I would say that the classic approach is to fragment the data: part of it is stored in Cassandra, while the rest lives in other systems that provide guarantees Cassandra can't deliver.

The drawback of this approach is increased complexity (and therefore, potentially, lower speed and quality) and higher maintenance costs. Instead of using one system for data storage, applications now have to combine and process results from several sources. Also, the degradation of either system can have significant negative consequences, forcing the infrastructure team to chase two rabbits.

Apache Ignite as In-Memory Layer

Another way is to put another system on top of Cassandra and divide responsibilities between them. In this setup, Ignite provides the following:

1. The performance limits imposed by the disk disappear: Apache Ignite can operate fully in RAM, one of the fastest and cheapest storage options available;

2. Full support for standard SQL99, including JOINs, GROUP BY, and ORDER BY, as well as DML, lets you normalize data and simplifies analytics; combined with in-memory performance, it opens up HTAP, that is, real-time analytics on operational data;

3. Support for the JDBC and ODBC standards makes it easier to integrate with existing tools, such as Tableau, and frameworks like Hibernate or Spring Data;

4. Support for ACID transactions covers operations for which consistency is a must;

5. Distributed computing, stream processing, and machine learning let you quickly implement many new business scenarios that pay dividends.

The Apache Ignite cluster loads the Cassandra data that needs to be queried and works in write-through mode, ensuring that all changes are written back to Cassandra. With the data in Ignite, we are free to use SQL, run transactions, and benefit from in-memory speed.

Furthermore, the data can be analyzed in real time with visualization tools like Tableau.

Setting Up

Next, I'll give an example of a simple "synthetic" integration of Apache Cassandra and Apache Ignite to show how it works and that it is feasible.

First, I create the necessary tables in Cassandra and fill them with data; then I initialize the Java project and write the DTO classes; and finally I show the central part: configuring Apache Ignite to work with Cassandra.

I will use macOS Sierra, Cassandra 3.10, and Apache Ignite 2.3. On Linux, the commands should be similar.

Cassandra: tables and data

First, download the Cassandra distribution into the ~/Downloads directory, navigate to that directory, and unpack the archive:

Run Cassandra with the default settings; for testing, this is enough.

Next, start the Cassandra interactive shell (cqlsh) and create the test data structures. We use a plain surrogate id as the key. For Cassandra tables it often makes sense to choose keys that reflect how the data will be queried later, but we keep the example simple:

Let's check that all the data has been recorded correctly:

cqlsh:ignitetest> SELECT * FROM catalog_category;

 id | description                       | name             | parentId
----+-----------------------------------+------------------+----------
  1 | Appliances for households!        | Appliances       |     null
  2 | The best fridges we have!         | Refrigerators    |        1
  3 | Engineered for exceptional usage! | Washing machines |        1

(3 rows)

cqlsh:ignitetest> SELECT * FROM catalog_good;

 id | categoryId | description                     | name            | oldPrice | price
----+------------+---------------------------------+-----------------+----------+--------
  1 |          2 | Best fridge of 2027!            | Fridge Buzzword |     null |   1000
  2 |          2 | The cheapest offer!             | Fridge Foobar   |      900 |    300
  4 |          3 | Washes, squeezes, dries!        | Appliance Habr# |     null |  10000
  3 |          2 | Premium fridge in your kitchen! | Fridge Barbaz   |   300000 | 500000

(4 rows)

Initializing Java Project

There are two ways to work with Ignite: you can download the distribution from ignite.apache.org and add JAR files with your classes and an XML configuration file to it, or you can use Ignite as a Maven dependency of your Java project. In this article, we go with the second option.

We will create a new project using Maven and add the following libraries:

- `ignite-cassandra-store` for integration with Cassandra;

- `ignite-spring` to set up Ignite with Spring XML configuration.

Both libraries depend on `ignite-core`, which contains the core functionality of Apache Ignite, so Maven will pull it in as well:

Next, you need to create DTO classes that will represent Cassandra tables:

We annotate with `@QuerySqlField` the fields that will be queried with Ignite SQL. If a field is not annotated, it cannot be selected or used as a filter in SQL.

You can also fine-tune indexes and full-text indexes, but that is beyond the scope of this example. More information about setting up SQL in Apache Ignite can be found in the corresponding section of the documentation.
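A minimal sketch of the two DTO classes, assuming `Long` for ids and prices and `String` for text columns (the Cassandra column types are not shown here). Field names follow the cqlsh output above. The `@QuerySqlField` annotation appears only in a comment so the sketch compiles without `ignite-core`; in the real project it sits on each field that SQL should see.

```java
import java.io.Serializable;

// DTO for the catalog_category table. Field names follow the cqlsh output;
// the Java types are assumptions. In the real project, SQL-visible fields also
// carry Ignite's annotation, for example:
//   @QuerySqlField(index = true) private Long id;
// (org.apache.ignite.cache.query.annotations.QuerySqlField). It is omitted here
// so that the sketch compiles without ignite-core on the classpath.
class CatalogCategory implements Serializable {
    private static final long serialVersionUID = 1L;

    private Long id;
    private String name;
    private String description;
    private Long parentId; // null for a top-level category

    // No-arg constructor and getters/setters for reflection-based (POJO) mapping.
    public CatalogCategory() { }

    public CatalogCategory(Long id, String name, String description, Long parentId) {
        this.id = id;
        this.name = name;
        this.description = description;
        this.parentId = parentId;
    }

    public Long getId() { return id; }
    public void setId(Long id) { this.id = id; }
    public String getName() { return name; }
    public void setName(String name) { this.name = name; }
    public String getDescription() { return description; }
    public void setDescription(String description) { this.description = description; }
    public Long getParentId() { return parentId; }
    public void setParentId(Long parentId) { this.parentId = parentId; }
}

// DTO for the catalog_good table, following the same conventions.
class CatalogGood implements Serializable {
    private static final long serialVersionUID = 1L;

    private Long id;
    private Long categoryId; // refers to CatalogCategory.id
    private String name;
    private String description;
    private Long price;
    private Long oldPrice;   // null when there is no previous price

    public CatalogGood() { }

    public CatalogGood(Long id, Long categoryId, String name, String description,
                       Long price, Long oldPrice) {
        this.id = id;
        this.categoryId = categoryId;
        this.name = name;
        this.description = description;
        this.price = price;
        this.oldPrice = oldPrice;
    }

    public Long getId() { return id; }
    public void setId(Long id) { this.id = id; }
    public Long getCategoryId() { return categoryId; }
    public void setCategoryId(Long categoryId) { this.categoryId = categoryId; }
    public String getName() { return name; }
    public void setName(String name) { this.name = name; }
    public String getDescription() { return description; }
    public void setDescription(String description) { this.description = description; }
    public Long getPrice() { return price; }
    public void setPrice(Long price) { this.price = price; }
    public Long getOldPrice() { return oldPrice; }
    public void setOldPrice(Long oldPrice) { this.oldPrice = oldPrice; }
}
```

The no-arg constructor and getters/setters are included on the assumption that the reflection-based (POJO) mapping configured later expects them.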

Configuring Apache Ignite

Let's create our configuration file, `apacheignite-cassandra.xml`, in `src/main/resources`. Here is the full configuration; its crucial parts are explained below.

The configuration can be divided into two main sections: first, the definition of a DataSource that establishes the connection to Cassandra; second, the Apache Ignite settings.

The first part of the configuration is concise:

We define the Cassandra data source with an IP address to use for the connection.

Next, we configure Apache Ignite. In our example, we deviate only minimally from the default settings, so we override just the `cacheConfiguration` property, which contains the list of Ignite caches mapped to Cassandra tables:

The first cache is mapped to Cassandra's `catalog_category` table:

For every cache-table pair, we enable read-through and write-through modes: if a requested key is not in Ignite, it is read from Cassandra, and anything written to Ignite is automatically sent to Cassandra as well.
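The read-through/write-through contract can be sketched in a few lines of plain Java. `FakeTable` and `ThroughCache` are hypothetical stand-ins, not Ignite or Cassandra APIs: `FakeTable` plays the Cassandra table and counts the reads and writes that reach it, while `ThroughCache` plays the in-memory layer, including a bulk `preload()` comparable in spirit to `loadCache(null)`.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical stand-in for a Cassandra table (not a Cassandra or Ignite API).
class FakeTable<K, V> {
    final Map<K, V> rows = new HashMap<>();
    int reads = 0;  // single-key reads that reached the "disk"
    int writes = 0; // writes that reached the "disk"

    V read(K key) { reads++; return rows.get(key); }
    void write(K key, V value) { writes++; rows.put(key, value); }
    Map<K, V> readAll() { return new HashMap<>(rows); }
}

// Hypothetical in-memory layer with read-through and write-through semantics.
class ThroughCache<K, V> {
    private final Map<K, V> memory = new HashMap<>();
    private final FakeTable<K, V> table;

    ThroughCache(FakeTable<K, V> table) { this.table = table; }

    // Bulk preload of all rows into memory.
    void preload() { memory.putAll(table.readAll()); }

    // Read-through: serve from memory; on a miss, read the table and remember the row.
    V get(K key) {
        V value = memory.get(key);
        if (value == null) {
            value = table.read(key);
            if (value != null) {
                memory.put(key, value);
            }
        }
        return value;
    }

    // Write-through: persist first, then update memory.
    void put(K key, V value) {
        table.write(key, value);
        memory.put(key, value);
    }
}
```

In this sketch, `put` writes to the table before updating memory, so a failed store write never leaves memory ahead of the table. Ignite itself also offers write-behind, which batches store updates asynchronously instead.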

As the next step, we specify that the `catalog_category` schema will be used in Ignite SQL:

Finally, let's connect the cache to Cassandra. This part has two main subsections: first, a reference to the previously defined DataSource; second, the mapping between the Cassandra table and the Ignite cache.

This is done through the `persistenceSettings` property. It is better to reference an external XML file with the mapping configuration there, but for simplicity we embed the XML directly in the Spring configuration as a CDATA section:

The mapping configuration is fairly intuitive:

At the top level, the `persistence` tag specifies the keyspace (`IgniteTest` in this case) and the table (`catalog_category`) that we map. Next, the key of the Ignite cache is declared as `Long`, a primitive type mapped to the `id` column of the Cassandra table. The value is the `CatalogCategory` class, whose instances are built via reflection (`strategy="POJO"`) from the columns of the Cassandra table.
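To make the idea behind `strategy="POJO"` more concrete, here is a small reflection-based sketch that builds a bean from a row of column/value pairs through its JavaBean setters. It only illustrates the idea and is not Ignite's implementation; `PojoMappingSketch`, `fromRow`, and the nested `Category` bean are hypothetical, and matching column names case-insensitively is an assumption of the sketch, motivated by Cassandra folding unquoted identifiers to lower case.

```java
import java.beans.IntrospectionException;
import java.beans.Introspector;
import java.beans.PropertyDescriptor;
import java.lang.reflect.Method;
import java.util.HashMap;
import java.util.Map;

// Illustration only, not Ignite's implementation: builds a bean from a table row
// by matching column names to JavaBean properties.
class PojoMappingSketch {

    // Minimal bean standing in for the CatalogCategory DTO.
    public static class Category {
        private Long id;
        private String name;
        private Long parentId;

        public Long getId() { return id; }
        public void setId(Long id) { this.id = id; }
        public String getName() { return name; }
        public void setName(String name) { this.name = name; }
        public Long getParentId() { return parentId; }
        public void setParentId(Long parentId) { this.parentId = parentId; }
    }

    static <T> T fromRow(Class<T> type, Map<String, Object> row) {
        // Unquoted Cassandra identifiers are case-insensitive, so compare in lower case.
        Map<String, Object> columns = new HashMap<>();
        row.forEach((column, value) -> columns.put(column.toLowerCase(), value));

        try {
            T bean = type.getDeclaredConstructor().newInstance(); // needs a no-arg constructor
            for (PropertyDescriptor pd :
                    Introspector.getBeanInfo(type, Object.class).getPropertyDescriptors()) {
                Method setter = pd.getWriteMethod();
                String column = pd.getName().toLowerCase();
                if (setter != null && columns.containsKey(column)) {
                    // A null column value stays null, e.g. the parentId of a root category.
                    setter.invoke(bean, columns.get(column));
                }
            }
            return bean;
        } catch (ReflectiveOperationException | IntrospectionException e) {
            throw new IllegalStateException("Cannot map row to " + type.getName(), e);
        }
    }

    public static void main(String[] args) {
        Map<String, Object> row = new HashMap<>();
        row.put("id", 2L);
        row.put("name", "Refrigerators");
        row.put("parentid", 1L);
        Category c = fromRow(Category.class, row);
        System.out.println(c.getId() + " " + c.getName() + " " + c.getParentId()); // prints "2 Refrigerators 1"
    }
}
```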

More detailed settings for the mapping, which are beyond the scope of this example, can be found in the corresponding section of the documentation.

The second cache, which holds the product data, is configured the same way.

Launching

To start, create the class `com.gridgain.test.Starter`:

Here we use the `Ignition.start(...)` method to launch an Apache Ignite node and `ignite.cache(...).loadCache(null)` to preload data from Cassandra into Ignite.

SQL

Once the Ignite cluster is started and connected to Cassandra, we can run Ignite SQL queries from any client that supports JDBC or ODBC. Here we use SQuirreL SQL, first adding the Apache Ignite JDBC driver to it:

Create a new connection with a URL of the form `jdbc:ignite://localhost/CatalogGood`, where `localhost` is the address of one of the Apache Ignite nodes and `CatalogGood` is the cache that queries go to by default.
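The same URL also works from plain Java code through JDBC. A minimal sketch, assuming the Ignite JDBC driver from `ignite-core` is on the classpath and registers itself with `DriverManager`; the class name `IgniteJdbcSketch` is hypothetical, and the query assumes the SQL table is named after the `CatalogGood` value class.

```java
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.ResultSet;
import java.sql.SQLException;
import java.sql.Statement;

// Hypothetical helper class; only standard java.sql calls are used.
class IgniteJdbcSketch {

    // Builds a URL of the form jdbc:ignite://<host>/<cache>.
    static String url(String host, String cache) {
        return "jdbc:ignite://" + host + "/" + cache;
    }

    public static void main(String[] args) {
        String url = url("localhost", "CatalogGood");
        // Assumed query: the SQL table is named after the CatalogGood value class.
        String sql = "SELECT name, price FROM CatalogGood ORDER BY price";

        // try-with-resources closes the result set, statement, and connection.
        try (Connection conn = DriverManager.getConnection(url);
             Statement stmt = conn.createStatement();
             ResultSet rs = stmt.executeQuery(sql)) {
            while (rs.next()) {
                System.out.println(rs.getString("name") + " | " + rs.getLong("price"));
            }
        } catch (SQLException e) {
            // E.g. no suitable driver on the classpath, or no Ignite node reachable.
            System.err.println("Query failed: " + e.getMessage());
        }
    }
}
```

Without the driver on the classpath, `DriverManager` throws an `SQLException` (no suitable driver), which the sketch reports instead of crashing.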

Finally, let's run several SQL queries:

| goodName | goodPrice | category | parentCategory |
|---|---|---|---|
| Fridge Buzzword | 1000 | Refrigerators | Appliances |
| Fridge Foobar | 300 | Refrigerators | Appliances |
| Fridge Barbaz | 500000 | Refrigerators | Appliances |
| Appliance Habr# | 10000 | Washing machines | Appliances |

| name | avgPrice |
|---|---|
| Refrigerators | 650 |
| Washing machines | 10000 |
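To spell out what a query producing the first result has to do, here is a plain-Java hash join over the sample rows from the cqlsh output: each good is matched with its category and with that category's parent. This is an illustration of JOIN semantics only, not how Ignite executes SQL; `JoinSketch` and its members are hypothetical names for this sketch.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Illustration only: a hand-written hash join over the sample rows, not Ignite's SQL engine.
class JoinSketch {
    static final class Category {
        final long id;
        final String name;
        final Long parentId; // null for the root category
        Category(long id, String name, Long parentId) { this.id = id; this.name = name; this.parentId = parentId; }
    }

    static final class Good {
        final long id;
        final long categoryId;
        final String name;
        final long price;
        Good(long id, long categoryId, String name, long price) {
            this.id = id; this.categoryId = categoryId; this.name = name; this.price = price;
        }
    }

    static List<Category> sampleCategories() {
        return Arrays.asList(
            new Category(1, "Appliances", null),
            new Category(2, "Refrigerators", 1L),
            new Category(3, "Washing machines", 1L));
    }

    static List<Good> sampleGoods() {
        return Arrays.asList(
            new Good(1, 2, "Fridge Buzzword", 1000),
            new Good(2, 2, "Fridge Foobar", 300),
            new Good(4, 3, "Appliance Habr#", 10000),
            new Good(3, 2, "Fridge Barbaz", 500000));
    }

    // Roughly: goods g JOIN categories c ON g.categoryId = c.id
    //          LEFT JOIN categories p ON c.parentId = p.id ORDER BY g.id
    static List<String[]> goodsWithCategories(List<Good> goods, List<Category> categories) {
        Map<Long, Category> byId = new HashMap<>(); // build side of the hash join
        for (Category c : categories) {
            byId.put(c.id, c);
        }
        List<Good> ordered = new ArrayList<>(goods);
        ordered.sort(Comparator.comparingLong(g -> g.id));

        List<String[]> rows = new ArrayList<>();
        for (Good g : ordered) { // probe side
            Category c = byId.get(g.categoryId);
            if (c == null) {
                continue; // inner join: skip goods whose category is unknown
            }
            Category parent = c.parentId == null ? null : byId.get(c.parentId);
            rows.add(new String[] {g.name, Long.toString(g.price), c.name, parent == null ? null : parent.name});
        }
        return rows;
    }

    public static void main(String[] args) {
        for (String[] row : goodsWithCategories(sampleGoods(), sampleCategories())) {
            System.out.println(String.join(" | ", row));
        }
    }
}
```

Running `main` prints the same four rows as the first result table, in the same order. In Ignite, this whole computation can be expressed as a single SQL statement with two JOINs, something CQL cannot express.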

Conclusion

In this simple example, we've shown how to bring SQL capabilities and the speed of RAM to existing Cassandra deployments by placing Ignite in front of them. So, if you are struggling with any of the Cassandra limitations described in this article, try Ignite as a possible solution.